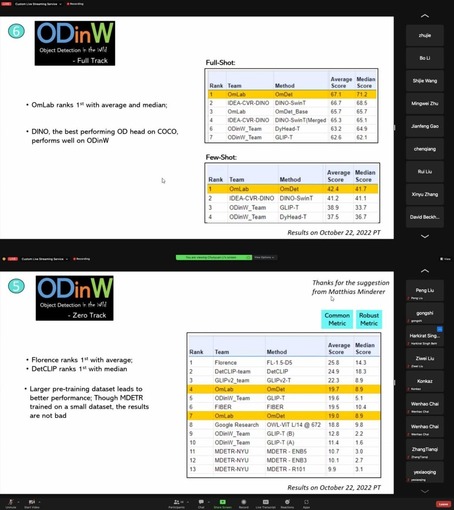

Recently, Dr. Zhao Tiancheng, director of the Om Artificial Intelligence Research Center of the Binjiang Institute of Zhejiang University and chief scientist of Hangzhou Linker Technology, together with the OmLab team, won first place in the Full-Shot and Few-Shot tracks and fourth place in the Zero-Shot track of the ECCV 2022 ODinW Challenge. Dr. Zhao was invited to give a spotlight talk introducing the advances and innovations of OmDet, a new vision-language object detection framework.

ECCV (European Conference on Computer Vision) is one of the three top international conferences in computer vision. The ODinW (Object Detection in the Wild) Challenge, hosted by Microsoft Research, aims to advance the ability of computer vision systems to perform visual recognition in open-world, open-set settings.

In the Spotlight Session, Dr. Zhao presented the report "OmDet: Language-Aware Object Detection with Large-Scale Vision-Language Multi-dataset Pre-training", which drew considerable attention for the strong performance of the proposed OmDet method.

Object Detection (OD) is a fundamental task in computer vision, widely used in intelligent video surveillance, industrial inspection, robotics, and other fields. As computer vision has grown popular in both academia and industry, further innovation has become increasingly difficult. Classic OD research has focused on improving detector networks for higher accuracy and lower latency while using a fixed set of output labels (e.g., the 80 categories of MSCOCO). The OmLab team proposed OmDet, a new object detection framework based on VLP (Vision-Language Pre-training). Its continual learning approach investigates whether a detector can learn progressively from many OD datasets with a growing visual vocabulary and ultimately achieve open-vocabulary detection.

Tested on the COCO, Pascal VOC, Wider Face, and Wider Pedestrian OD datasets, OmDet was shown not only to learn from all of them without label collisions but also to outperform single-dataset detectors, thanks to knowledge sharing across tasks.

Building on these results, the OmLab team carried out further research on larger-vocabulary pre-training, using an OD dataset of 20 million images and 4 million unique text labels that combines manual labels and pseudo labels. The resulting model was evaluated on the recently proposed ODinW benchmark, which covers 35 different OD tasks across various domains.

The research shows that expanding the vocabulary through multi-dataset pre-training effectively improves zero-shot and few-shot learning as well as parameter efficiency.

OmDet achieves state-of-the-art performance on a series of downstream tasks. Future work includes more effective task sampling strategies and more diverse multimodal datasets. Improving its multilingual understanding and prompt tuning performance is also on the research agenda.

For more information, visit:

https://computer-vision-in-the-wild.github.io/eccv-2022/

Contact Info:

Company Name: Hangzhou Linker Technology Co., LTD

Contact Person: Giraffe

Telephone: 0571-88390065

Email: [email protected]

City: Hangzhou

Country: CHINA

Website: http://www.hzlh.com/

Source: Story.KISSPR.com

Release ID: 456763